In the world of enterprise tech, buzzwords are designed to short-circuit complexity. In 2025, terms like “Unified Data Platform,” “Generative AI-Ready,” and “Data Fabric” promise a seamless path to success. They paint a picture of an integrated, intelligent future, just a simple installation away.

While today’s cloud ecosystems are more powerful than ever, that promise often overlooks the foundational work required for success. The most common pain points in any cloud analytics or AI initiative have less to do with the new technology and more to do with the existing environment it’s connecting to.

Think of it as building a state-of-the-art smart home. You can’t install the AI assistants, automated lighting, and security systems until you’ve ensured the lot is graded, the foundation is solid, the utility lines are in place, and the permits are approved.

Before you dive into the next big platform, here are the critical “pre-work” areas to investigate.

1. Is Your Data & Infrastructure Truly Ready?

The focus has shifted from basic compatibility to holistic readiness. Before you can leverage AI, you must assess the entire data pipeline that will feed it. “Garbage in, garbage out” has never been more true.

- Hybrid and Multi-Cloud Connectivity: It’s no longer just about connecting to Azure or AWS. How will your on-premises data centers, private clouds, and other SaaS platforms securely and efficiently interact with your new analytics environment? Can your existing network links and VPNs handle the sustained, high-volume traffic required for model training and large-scale queries?

- Data Quality and Lineage: Where is your data coming from? Is it clean, validated, and trustworthy? You must have a clear understanding of data lineage to ensure your AI models and analytics dashboards are built on a foundation of truth.

- Vendor and Firmware Audits: Firmware compatibility is less of a blocker than it used to be, but it still deserves an audit. Check with your network and hardware vendors to ensure your core infrastructure isn’t running on unsupported legacy firmware that could create security risks or performance bottlenecks.
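As a rough illustration of the data quality point above, the sketch below counts two common problems in a batch of records: missing required values and repeated keys. The field names, the sample rows, and the `check_quality` helper are all hypothetical, and a real pipeline would likely rely on a dedicated validation tool rather than hand-rolled checks.

```python
from dataclasses import dataclass


@dataclass
class QualityReport:
    total_rows: int
    missing_required: int
    duplicate_keys: int

    @property
    def passed(self) -> bool:
        # The batch passes only if neither problem was found.
        return self.missing_required == 0 and self.duplicate_keys == 0


def check_quality(rows, key_field, required_fields):
    """Count rows with missing required values and rows whose key repeats."""
    seen_keys = set()
    missing = 0
    duplicates = 0
    for row in rows:
        # A required field counts as missing if it is absent, None, or empty.
        if any(row.get(field) in (None, "") for field in required_fields):
            missing += 1
        key = row.get(key_field)
        if key in seen_keys:
            duplicates += 1
        else:
            seen_keys.add(key)
    return QualityReport(len(rows), missing, duplicates)


# Hypothetical sample records, for illustration only.
sample_rows = [
    {"customer_id": 1, "email": "a@example.com", "region": "EU"},
    {"customer_id": 2, "email": "", "region": "US"},
    {"customer_id": 2, "email": "b@example.com", "region": "US"},
]

report = check_quality(sample_rows, "customer_id", ["email", "region"])
print(report, "passed:", report.passed)
```

Running a check like this at each hand-off between systems is one simple way to see where bad records enter the pipeline, which is the practical side of lineage.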

2. Have You Aligned the Human Layer?

Technology projects are people projects in disguise. In a complex organization, multiple teams, vendors, and departments own the various pieces you’ll need. Ignoring this is a recipe for delay.

- Identify All Stakeholders: Go beyond the IT department. Map out every team involved: the on-prem infrastructure team, the cloud networking group, third-party managed service providers (MSPs), departmental data owners, and cybersecurity. Understand their individual timelines, priorities, and SLAs from day one.

- Assess the Skills Gap: Do you have the right talent in-house? The skills needed for 2025 go beyond SQL. Do your teams understand FinOps, MLOps, prompt engineering, and the specific architecture of platforms like Microsoft Fabric or Databricks? Plan for training and upskilling early.

3. Have You Mastered Cloud FinOps and Governance?

Cost management in the cloud has matured into a formal discipline known as FinOps (Cloud Financial Management). It’s about creating financial accountability for your cloud usage, and it’s non-negotiable for any at-scale deployment.

- Understand the New Pricing Models: The billing landscape is more complex than ever. Are you paying per hour, per query, per user, or based on consumption (like tokens for a Generative AI model)? Your solution will likely involve a mix of these. Work with procurement up front to ensure your vendor agreements cover these new services and that you aren’t exposed to runaway costs.

- Empower Accounts Payable: Your AP team is likely accustomed to fixed, predictable monthly software bills. Introduce them to the concept of metered, variable billing early. Provide them with forecasts and explain why costs may fluctuate month-to-month to avoid friction later.

- Implement Cost-Saving Measures: Are you leveraging cost-saving features like reserved instances for predictable workloads, or auto-scaling and scheduled shutdowns to release resources when they are not in use? Building a cost-aware culture and automating these practices is key to maximizing your cloud ROI.
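To make the mix of pricing models concrete, here is a minimal forecasting sketch that combines hourly, per-query, per-user, and token-based charges into one monthly estimate. Every rate and usage figure below is a made-up placeholder, not a real vendor price; substitute the numbers from your own agreements.

```python
from dataclasses import dataclass


@dataclass
class MonthlyUsage:
    compute_hours: float
    queries: int
    users: int
    tokens: int


# Hypothetical unit prices in USD; replace with your contracted rates.
RATES = {
    "per_compute_hour": 2.50,
    "per_query": 0.01,
    "per_user": 20.00,
    "per_1k_tokens": 0.002,
}


def forecast_cost(usage, rates=RATES):
    """Return a per-category cost breakdown plus the total for one month."""
    breakdown = {
        "compute": usage.compute_hours * rates["per_compute_hour"],
        "queries": usage.queries * rates["per_query"],
        "seats": usage.users * rates["per_user"],
        "tokens": usage.tokens / 1000 * rates["per_1k_tokens"],
    }
    breakdown["total"] = sum(breakdown.values())
    return breakdown


# Two hypothetical months: same headcount, very different metered usage.
quiet_month = MonthlyUsage(compute_hours=200, queries=50_000, users=40, tokens=10_000_000)
busy_month = MonthlyUsage(compute_hours=600, queries=150_000, users=40, tokens=60_000_000)

for label, usage in [("quiet", quiet_month), ("busy", busy_month)]:
    cost = forecast_cost(usage)
    print(f"{label}: total ${cost['total']:.2f} (seats ${cost['seats']:.2f})")
```

In this made-up scenario the per-user charge stays flat while the metered categories drive the difference between the two months, which is the kind of swing worth walking through with Accounts Payable before the first invoice arrives.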

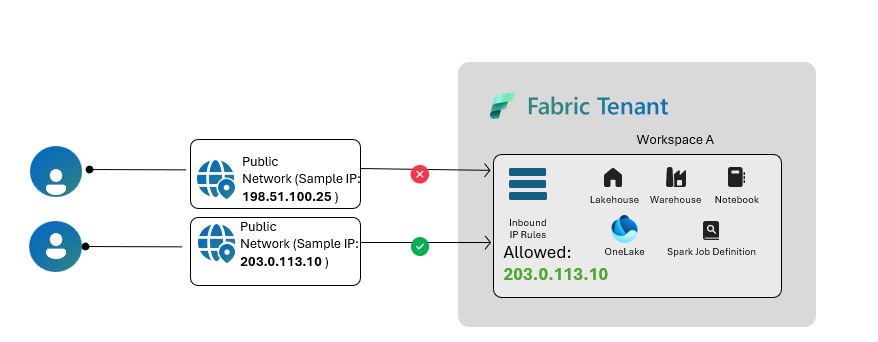

- Establish Robust Data Governance: Who has access to what data, and why? How will you manage access control as data flows into these new, powerful AI-driven platforms? Define your security policies, data masking rules, and compliance frameworks (e.g., GDPR, CCPA) before you migrate sensitive information.
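One way to picture a data masking rule is as a per-role allow-list of fields, with everything else replaced before the data is shared. The sketch below assumes hypothetical role names, field names, and a simple hash-based replacement; the governance features built into your platform would normally enforce this, and an unsalted hash like this one is not strong anonymization.

```python
import hashlib

# Hypothetical policy: the fields each role may see unmasked.
UNMASKED_FIELDS = {
    "analyst": {"region", "order_total"},
    "compliance": {"region", "order_total", "email"},
}


def pseudonymize(value: str) -> str:
    """Replace a value with a short, stable prefix of its SHA-256 digest."""
    return hashlib.sha256(value.encode("utf-8")).hexdigest()[:12]


def mask_record(record: dict, role: str) -> dict:
    """Return a copy of the record with fields the role may not see masked."""
    # Roles missing from the policy get an empty allow-list, so they see nothing.
    allowed = UNMASKED_FIELDS.get(role, set())
    return {
        field: value if field in allowed else pseudonymize(str(value))
        for field, value in record.items()
    }


# Hypothetical record, for illustration only.
record = {"email": "a@example.com", "region": "EU", "order_total": 129.5}

print(mask_record(record, "analyst"))
print(mask_record(record, "compliance"))
```

Defaulting unknown roles to an empty allow-list keeps the rule "deny unless granted", which is usually the safer starting point when sensitive data moves to a new platform.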

The promise of AI-driven analytics is real, but it’s not magic. Its success is built on the diligent, often unglamorous, work of preparation. Don’t let the latest buzzwords short-circuit your approach. By focusing on these basics, you move beyond the hype and build a data strategy that delivers lasting value.

Author: Jim Fahrenbach